Measuring What Matters and Doing It Well: Innovating Assessment in the AI Era

From Speed Traps to GPS

Standardized tests too often function like speed traps: they deliver backward-looking verdicts that offer no course correction. Students instead need a GPS for learning, a system that provides practical guidance and navigation rather than a mere audit.

Society increasingly prioritizes skills for the future (such as creative problem-solving, collaboration, and AI literacy), yet still relies on 20th-century bubble sheets that cannot measure them. As AI begins to reshape economies and labor markets, the gap between our emerging educational goals and our legacy measurement tools risks growing into a chasm. It is time to embrace assessment in the service of learning: a paradigm in which tests don’t just measure what a student knows, but also provide useful insights and feedback.

Measuring the Wrong Things

As James Pellegrino argues, we must shift our focus to ‘measure what matters, not just what is easy,’ designing assessments that capture the complex, multidimensional skills essential in the AI era. Employers report that graduates lack critical thinking, communication, and collaborative problem-solving skills. Meanwhile, tests offer static snapshots: they capture a final answer but miss the process that produced it.

When a student bubbles “C”, does that reflect understanding, memorization, or a guess? Does a wrong answer signal a knowledge gap or a simple calculation error?

Compounding this, “snapshot” testing creates a backwash effect on teaching. Because what gets measured gets valued, rote testing risks narrowing the focus of the classroom. Assessments must instead demand and reward creative, even inventive, problem-solving.

Five Design Principles

Assessment innovation is both desirable and possible. Natalie Foster and Mario Piacentini’s recent chapter, Innovating Assessment Design to Better Measure and Support Learning, proposes five design principles to transform assessments from static audits into dynamic learning experiences.

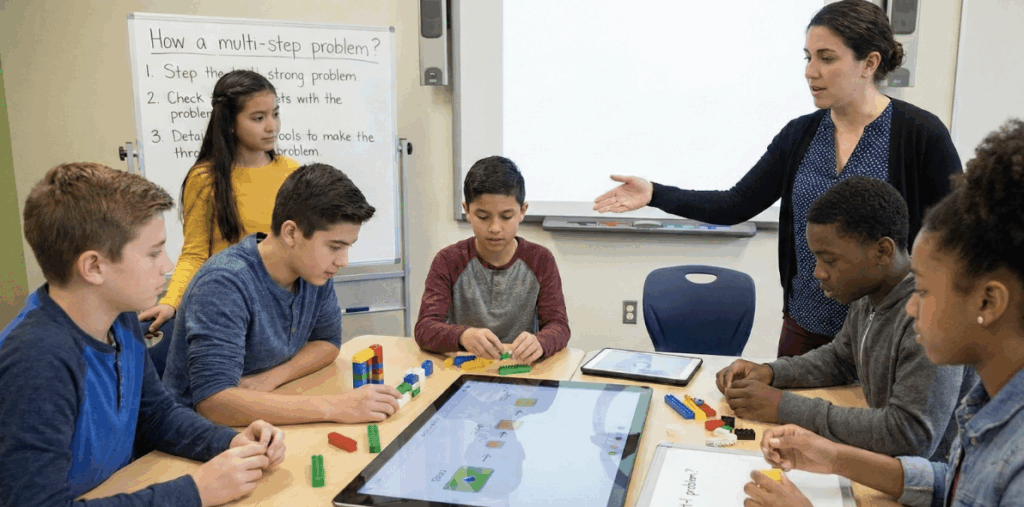

1. Using Extended Performance Tasks (Make It Real). We need assessments that replicate “the key features of those educational experiences where deeper learning happens”. Students can demonstrate their preparation for future learning by completing extended performance tasks in which they explore a problem space, iterate, and draw on resources.

2. Accounting for Students’ Prior Knowledge (Context Is Key). Skills like critical or creative thinking do not exist in a vacuum; they depend heavily on what you know. A student might be creative when writing fantasy stories yet struggle to propose solutions to ocean acidification. We must assess students’ thinking skills across multiple knowledge domains and design resource-rich task environments in which performance depends less on any single piece of knowledge.

3. Providing Opportunities for Productive Failure (The Value of Meaningful Struggle). Real-world success often follows failure, yet tests too often punish mistakes. Research shows that invention activities, including grappling with concepts before instruction, improve learning. Similarly, assessments that allow for productive failure, by exposing students to questions and response formats they have not extensively practiced, are better placed to measure whether students can transfer what they have learnt to new problems.

4. Providing Feedback and Instructional Support (The Test That Contributes). Assessments should act less like a silent judge and more like an engaged tutor. Technology can now offer hints when a learner is stuck. Emerging AI solutions can turn the test into a conversation, generating better data on learning potential: does a student give up after a failed attempt, or ask for a hint and act on it?

5. Designing “Low Floor, High Ceiling” Tasks (Design for Adaptivity). Fair assessments work like playgrounds, with a “low floor” that gives every student access and a “high ceiling” that challenges the most advanced. Together, adaptive design and an open solution space capture the “cutting edge” of every student’s ability.

Proof of Concept: It’s Already Happening

This vision of better measures that support learning is not science fiction; it is being built today.

The OECD’s Platform for Innovative Learning Assessments (PILA) is a large library of engaging formative assessment tasks that provide real-time feedback to students and teachers. The simulations in the PISA 2025 Learning in the Digital World assessment will soon provide global data on how prepared students are to learn to do new things through exploration and iteration.

Addressing the Skeptics

Critics will point to the challenges: cost, time, and reliability. Developing and validating interactive tasks requires investment, but open-source solutions make task templates reusable and transferable across subjects and borders. When well designed, interactive performance tasks can provide many observation points into students’ reasoning, which increases reliability. We don’t need to replace every test overnight; traditional tests still have value. But we must diversify the assessment portfolio. We cannot afford not to invest in better data. The cost of failing to prepare rising generations for the complexity of the AI era is far higher than the cost of upgrading our measurement tools.

By Way of Conclusion

Natalie Foster and Mario Piacentini’s new case study suggests that, by applying these principles, assessment can serve as a linchpin connecting teaching and learning.

As Andreas Schleicher argues, “there is much work yet to be done, and it will require the convergence of political, financial and intellectual capitals to bring these ideas to scale.”

Policymakers must create space for pilots, funders must back ambitious open-source tasks and platforms, and educators must demand tools that honor their students’ complexity.

Imagine a student finishing a test feeling more capable. To assess is, intrinsically, to teach and to learn. It is time to build the GPS our students deserve.

Read Natalie and Mario’s full case study: Innovating Assessment Design to Better Measure and Support Learning

Mario Piacentini
