Curbing Killer Robots And Other Misuses of AI

Code that learns is aiding every aspect of life. We can look forward to more convenience, less disease, and safer and cheaper transportation. But artificial intelligence is moving faster than public policy. In addition to addressing job dislocation, it’s time to make plans to curb the misuse of AI, including discrimination, autonomous weapons, and excessive surveillance.

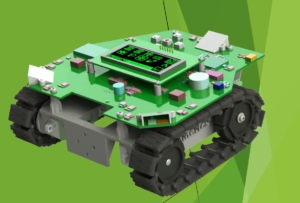

Killer Robots. AlphaPilot is an open innovation challenge focused on AI for autonomous drone systems sponsored by Lockheed Martin. The $2 million purse adds to the excitement about the challenge.

The flip side is that drones are being weaponized and are changing the character of warfare. A fleet of cheap drones poses a threat to individual targets and big targets like ships and bases. With more autonomy, these weapons will pose an even greater threat.

The Campaign to Stop Killer Robots is an NGO formed in 2012 to counter the threat of machines gone bad. The campaign, backed by Tesla’s Elon Musk and Alphabet’s Mustafa Suleyman, seeks to ban such machines outright.

The United Nations is scrambling to get ahead of this threat. As chair of the United Nations’ Convention on Certain Conventional Weapons, Amandeep Gill has the difficult task of leading 125 member states through discussions on the thorny technical and ethical issue of “killer robots”: military robots that can engage targets independently.

The Future of Life Institute, a nonprofit devoted to mitigating existential risks, launched a sensational short film, Slaughterbots, which depicts a dystopian near-future menaced by homicidal drones. On a tamer note, the Future of Life Institute has an informative podcast on autonomous weapons and encourages supporters to sign a pledge.

Gill doesn’t find sensationalism much help but admits that it’s getting hard to determine when and how humans are in charge. His focus is on a set of protocols that could limit deployment of systems that make decisions about killing humans.

Kenneth Payne of King’s College London warns, “We are only just beginning to work through the potential impact of artificial intelligence on human warfare, but all the indications are that they will be profound and troubling, in ways that are both unavoidable and unforeseeable.”

Discrimination. As more machines make more judgments, we’re seeing old biases baked into decisions about facial recognition, criminal sentencing, and mortgage approvals.

“Algorithmic bias is shaping up to be a major societal issue at a critical moment in the evolution of machine learning and AI,” said the MIT Tech Review. “If the bias lurking inside the algorithms that make ever-more-important decisions goes unrecognized and unchecked, it could have serious negative consequences, especially for poorer communities and minorities.”

The more insidious, self-imposed threat of discrimination is our own media feed, trained by a series of selections and swipes, which increasingly steers each of us into our own information gully. If Barack Obama was the first president elected by social media, Donald Trump was the first president elected by algorithmic bias (learned biases amplified by artificial reinforcement).

Surveillance. Chinese municipalities are using facial recognition technology and AI to clamp down on crime. Home to more than 200 million surveillance cameras, China is building an unprecedented national surveillance system.

One company leading the use of AI in facial recognition is SenseTime, an Alibaba-backed startup (valued at $4.5 billion). The company claims that Guangzhou’s public security bureau has identified more than 2,000 crime suspects since 2017 with the help of the technology.

China is also rolling out a “social credit system” that tracks the activities of citizens and ranks them with scores that can determine whether they are barred from everything from plane flights to certain online dating services.

A majority of hedge funds are now using artificial intelligence and machine learning to help them make trades. This has all made markets faster, more efficient and more accessible to online investors. However, Simone Foxman, a reporter for Bloomberg, said, “The kinds of data that the really high-end firms are using isn’t as anonymized as you might think.” And for some investors, “there’s a lot of information about you that can be traced to you specifically.”

AI is boosting the amount and type of information being tracked about every person. It’s linking data and making inferences about each of us in programmed and unforeseen ways.

Moving Toward Beneficial AI: The Role of Education

China is planning for global dominance in AI; the State Council set that goal last year. The speed with which the country built high-speed rail and rolled out 66,000 business incubators two years after launching an innovation campaign suggests it is addressing AI with similar mobilization and agility.

The planned economy will deal with ethical issues associated with AI in a centralized fashion. We in the West may not like some of the answers (like excessive surveillance), but China will deal efficiently with issues as they arise. The West, particularly the United States, will go through a much messier process of what the Future of Life Institute calls AI alignment.

There are four things that leading schools and nonprofits are doing to lead the community response to the emerging questions of AI ethics:

1. Lead community conversations. Like the 200 districts that have used the Battelle for Kids Portrait of a Graduate process, schools can host community conversations about what’s happening, what it means, and how to prepare.

2. Introduce AI to secondary school students. This fall, middle school students in the Montour School District (Pittsburgh) gained access to a new program with a myriad of opportunities to explore and experience AI, using it to cultivate, nurture, and enhance initiatives aimed at increasing the public good.

Montour uses AI and coding tools from ReadyAI, which organizes the annual World AI Competition for Youth (“WAICY”) on Carnegie Mellon University’s campus.

AI4All is a national nonprofit that connects underrepresented high school students with mentors from leading university computer science programs.

3. Teach coding and computational (and design) thinking. South Fayette School District (Pittsburgh) integrates computational thinking from kindergarten through high school. A growing number of schools, including Design Tech High in Redwood City, incorporate design thinking across the curriculum.

4. Integrate questions of AI ethics into secondary social studies. Energy Institute High (Houston) incorporates questions of the ethics of AI and robots into projects.

The American response to killer robots and the misuse of AI isn’t likely to be formulated by the federal government; it will emerge from community conversations and collective action. Schools can play an important role by exposing young people to the opportunities and challenges of exponential technology and by participating in local and regional ethics dialogs.

For more, see:

- Montour School District: America’s First Public School AI Program

- Let’s Talk About AI Ethics; We’re On a Deadline

- AI4All Extends The Power of Artificial Intelligence to High School Girls

- 32 Ways AI is Improving Education

- Six Experts Explain the Killer Robots Debate (Future of Life Podcast)

This post was originally published on Forbes.


Tom Vander Ark

Another informative post on the risk of armed drones https://www.army-technology.com/features/autonomous-delivery-drones-military-logistics/
