Cameras Everywhere: The Ethics of Eyes in the Sky

Pictures of people’s houses can predict their chances of getting into a car accident. The researchers who created the system acknowledged that “modern data collection and computational techniques…allow for unprecedented exploitation of personal data, can outpace development of legislation and raise privacy threats.”

Hong Kong researchers created a drone system that can automatically analyze a road surface. It suggests that we’re approaching the era of automated surveillance for civil and military purposes.

In lower Manhattan, police are planning a surveillance center where officers can view feeds from thousands of video cameras around downtown.

Microsoft turned down the sale of facial recognition software to California law enforcement, arguing that innocent women and minorities would be disproportionately held for questioning. The decision suggests that the technology is running ahead of public policy but is not yet ready for equitable use.

And speaking of facial recognition, JetBlue has begun using it in lieu of boarding passes on some flights, much to the chagrin of some passengers who wonder when they gave consent for this application and who has access to what biometric data.

Caltech researchers published a dataset that monitors wildlife across the American Southwest. It suggests that machines will automatically analyze changes in the environment for us.

Green Bay police have been taking advantage of Ring doorbell videos to solve crimes; they call it “Facebook meets neighborhood watch.”

Last month, insurtech startup Hippo cut a deal with GIA Maps, so now, in addition to having monitors inside your house, they can monitor your house and neighborhood from the sky (and text you that your homeowners insurance rates just went up because of your new addition and because your area just became more fire-prone).

In December, AI Now reported on a subclass of facial recognition that supposedly measures your affect, with claims that it can detect your true personality, your inner feelings and even your mental health based on images or video of your face. AI Now warned against using these tools for hiring, access to insurance or policing.

Most of these are US examples, but surveillance is a worldwide movement. By 2020, there will be more than 30 billion connected devices, including almost a billion cameras.

As these 10 examples illustrate, we’ve entered an era of high surveillance with an emerging set of benefits and threats.

It’s Time to Talk

The ACLU thinks blanketing streets with cameras is a bad idea because video surveillance has not been proven effective, is susceptible to abuse and will lead to a chilling effect on public life.

Joy Buolamwini, of the MIT Media Lab, launched the Algorithmic Justice League to highlight and alleviate bias in AI systems. In particular, Buolamwini’s work has focused on the inability of facial recognition systems to accurately identify people of color.

Even the folks who make the tech say law enforcement shouldn’t base decisions to detain suspects or extend prison terms entirely on artificial intelligence because of its flaws and biases. Last month, Partnership on AI (tech giants plus the ACLU) published a report urging caution that concluded, “these tools should not be used alone to make decisions to detain or to continue detention.”

Next Steps

Don’t get me wrong, I’m excited about the potential benefits of safe streets and cheaper insurance. But it’s time to pump the brakes on 24/7 surveillance and put a few agreements in place. Here are four next steps.

First, cities and counties running closed-circuit TV should be transparent about where and why surveillance is used, when videos are recorded, how long they are retained, under what conditions they are shared with other government agencies, and what punishments would apply to violators. (The EU already has relevant policies.)

Second, we need national agreements on when and how facial recognition will be used–and it has to get better for all groups before it’s used in security applications.

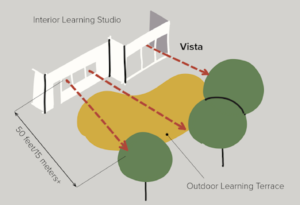

Third, schools need to add video to digital literacy education and engage students in exploring the ethics of 24/7 surveillance in a course on exponential technology (see school examples and a middle school ethics course from MIT).

Finally, and perhaps most challenging, it’s time for companies, trade groups and standards bodies to set agreements about diversity and transparency in AI development and deployment–especially in surveillance.

For more, see:

- Why Every High School Should Require an AI Course

- Why Social Studies is Becoming AI Studies

- Let’s Talk About AI Ethics; We’re On a Deadline

- Curbing Killer Robots And Other Misuses of AI

- Getting Started with AI: Resources For You and Your Community

This post was originally published on Forbes.

Tom Vander Ark

San Francisco banned the use of facial recognition by police and other agencies: https://www.nytimes.com/2019/05/14/us/facial-recognition-ban-san-francisco.html

Tom

Add smart drones that can chase objects (or people) to the list of things to worry about: https://arxiv.org/abs/1906.02919