The Impact of ESSA: Identifying Evidence-Based Resources

In 2015, the United States enacted the Every Student Succeeds Act (ESSA), arguably the greatest shift in federal education law since the passage of No Child Left Behind (NCLB) in 2001. Although the law was passed three years ago, a profound sense of urgency has prompted states across the country to step up their implementation efforts. ESSA requires all Title I schools to use “evidence-based” learning practices, redefining “best practices” in a way that is meant to drive measurable accountability across the nation.

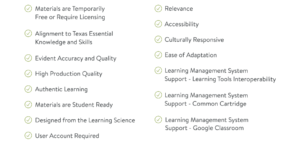

It is crucial that all educators understand not only how the law defines “evidence-based,” but also how to apply that definition at every level of decision-making when vetting learning resources against the parameters outlined in ESSA.

While ESSA and NCLB both aim to improve student outcomes, ESSA puts greater emphasis on efficacy research in place of NCLB’s focus on inputs. ESSA raises the bar on what qualifies as an “evidence-based” educational activity, while giving states and schools more flexibility in how they capture and demonstrate proficiency. In short, ESSA asks for evidence-based rather than merely research-based practices: the emphasis is on showing results.

And while ESSA specifies what evidence-based means, states determine how to get there. This autonomy gives districts a greater opportunity to serve their communities more intentionally.

Four Levels of Evidence

ESSA defines evidence-based according to the type of study conducted, not necessarily the strength of the study results. Those levels are:

- Strong Evidence. At least one well-designed and well-implemented experimental study, such as a randomized controlled trial.

- Moderate Evidence. At least one quasi-experimental study.

- Promising Evidence. At least one correlational study with statistical controls for selection bias.

- Demonstrates a Rationale. Relevant research or evaluation showing that the product will likely improve student outcomes; further evidence is still needed to confirm that it has a favorable effect.
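For teams that track vendor evidence claims in a spreadsheet or script, the four levels above can be sketched as a simple lookup from study design to level. This is only an illustration: the names `EvidenceLevel`, `LEVEL_BY_DESIGN`, `level_for`, and the design labels are hypothetical and do not come from ESSA or from any official tool. The lookup also says nothing about whether a given study was well designed or well implemented.

```python
from enum import IntEnum
from typing import Optional


class EvidenceLevel(IntEnum):
    """The four ESSA evidence levels described above (1 is the strongest)."""
    STRONG = 1
    MODERATE = 2
    PROMISING = 3
    DEMONSTRATES_RATIONALE = 4


# Hypothetical labels for study designs; choose whatever labels suit your records.
LEVEL_BY_DESIGN = {
    "randomized_controlled_trial": EvidenceLevel.STRONG,
    "quasi_experimental": EvidenceLevel.MODERATE,
    "correlational_with_controls": EvidenceLevel.PROMISING,
    "rationale_from_research": EvidenceLevel.DEMONSTRATES_RATIONALE,
}


def level_for(design: str) -> Optional[EvidenceLevel]:
    """Return the level for a study design, or None if the design is not listed."""
    return LEVEL_BY_DESIGN.get(design)


if __name__ == "__main__":
    print(level_for("quasi_experimental").name)
    print(level_for("vendor_testimonial"))
```

Returning `None` for an unlisted design, rather than defaulting to the lowest level, keeps an unsupported claim from being mistaken for one that demonstrates a rationale.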

Because there is no official approval process for evidence-based solutions and no federal review, it’s crucial that schools and districts ask companies and providers the right questions about their research and findings.

Curriculum Associates recently released a white paper, ESSA and Evidence Claims: A Practical Guide to Understanding What “Evidence-Based” Really Means, to help district leaders analyze and apply education research when making strategic decisions for their districts. The paper suggests asking:

- When was the study conducted? The education landscape is constantly evolving. Educational standards, instructional methods, and your expectations around how best to assess students can all change, and any one of those changes might make a study out of date. To make sure the research is still relevant, ask yourself how it relates to your current situation.

- How large was the sample for the study? It’s important to know that the student body was well represented in the study. Did it include a diverse set of students? What about subgroups? Make sure the student demographics represented mirror the students in your district.

- Was the study based on current content and standards? It’s possible that even a Level 1 study might be based on older standards or standards from another state. Recency and relevance both matter. Make sure the study addresses your state’s current standards and is highly relevant to the types of programs and interventions you’re implementing.

- What do the results say? That is, were they favorable? This one is really two questions in one: were the results statistically significant, and were they positive? Effect size is a common way of measuring the strength of an educational intervention’s impact, and it can help you understand how well the program might work for your district, both overall and in comparison to others.

- Does the product help students? This is perhaps the most important and most obvious question. Studies are naturally very clinical, but when we talk about efficacy, keep in mind the students you’re serving. Can it really help them show growth and proficiency? Does it have the favorable impact you’re looking for and can the program be replicated in your district?
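To make the idea of effect size concrete, here is a minimal sketch of one widely used measure, Cohen's d: the difference between two group means divided by their pooled standard deviation. The white paper is not quoted here as using this particular measure, so treat the choice as an assumption. The function name `cohens_d` and the scores below are made up purely for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(program_scores, comparison_scores):
    """Standardized mean difference between two groups (Cohen's d).

    Uses the pooled sample standard deviation; each group needs at least
    two scores, and the scores must not all be identical.
    """
    n1, n2 = len(program_scores), len(comparison_scores)
    s1, s2 = stdev(program_scores), stdev(comparison_scores)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(program_scores) - mean(comparison_scores)) / pooled_sd


if __name__ == "__main__":
    # Illustrative numbers only, not data from any real study.
    program = [2, 4, 6]
    comparison = [1, 3, 5]
    print(cohens_d(program, comparison))  # 0.5
```

A positive value means the program group scored higher on average. An effect size describes the magnitude of a difference, not whether it is statistically significant, so the two questions in the list above still need to be asked separately.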

How This Works on the Ground: A District’s Perspective

On a recent webinar with District Administration, Dr. John Lovato, Assistant Superintendent of Educational Services for the Rosemead School District, shared his process for vetting and deciding on a program.

Rosemead is located in Southern California and serves students from preschool through eighth grade. Approximately 35% of students are English language learners and 85% are considered “disadvantaged,” qualifying Rosemead as a high-need district.

When looking for a program, Dr. Lovato starts with relevance. He wants to make sure his students will be well served. He also looks at evidence beyond the data and will often call neighboring districts that have used the program to get their take and learn from their successes and challenges.

Lastly, Dr. Lovato looks into the resources needed to implement with fidelity. Programs often depend on facilitator ability and the training available. Can you support and sustain the program for several years? Can you provide the ongoing coaching and professional development necessary to fully implement the program?

Dr. Lovato’s team tends to focus on programs meeting ESSA levels two and three. He recommends asking the right questions about evidence claims. For example, it’s important to ask about the research conditions, because some studies are done under idealized conditions that do not reflect the actual concerns and considerations of implementing a program in a real district, at scale, over a sustained period of time.

Get Stakeholders Involved

Everyone from educators to district administrators should be involved in your decision to bring on a new program. Your district’s HR department needs to be aware of the decision and strategic in recognizing the professional learning that will be required for successful onboarding and ongoing support.

Curriculum leads, in partnership with other departments within the learning system, are in the trenches of this work. With momentum building around educating the whole child and additional resources being aligned to students’ social, emotional, and academic development, districts can couple their curriculum selections with the holistic needs of their students and support all members of the school community as they implement.

This collaborative process, with its added emphasis on diversity of voice and perspective, partnered with evidence-based selection, will create a platform for student growth to soar.

For more, see:

- ESSA and Evidence Claims: A Practical Guide to Understanding What “Evidence-Based” Really Means

- New NWEA Chief Chris Minnich on the Future of Assessment

- What to Expect When You’re Expecting…The Every Student Succeeds Act

- Smart Series Guide to EdTech Procurement

This post includes mentions of a Getting Smart partner. For a full list of partners, affiliate organizations and all other disclosures please see our Partner page.
